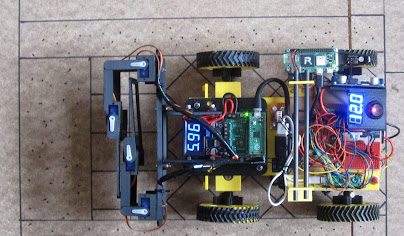

Simultaneous Locating And Mapping summary 16/Jan/2022

Actually, there’s not a lot of mapping: we build the arena, so we hopefully know where everything is. Locating the robot within the arena, though, is a big deal in PiWars 2022, as there is a lot more stuff about than in 2021.

General concept: stereo cameras and beacons.

Beacons

The logic chain ...

- You need identifiable landmarks in a known location.

- How do you pick them out from the background clutter? If you use LED beacons then you can drastically underexpose the image, leaving only the LEDs showing.

- How do you identify them? Use different colours.

- Why not a modulation? Because this has to be done fast on a moving platform, and you can’t afford the time to watch a beacon for long enough to see it change.

- What colours? Well, it turns out that the obvious RGB colours have a problem, which is that the Green is too close to the Blue for rapid distinguishing, so just Red and Blue then.

- How high? First guess was on the ground with the cameras underslung (leaving the robot top completely clear for attachments). But what about the sheep and troughs obstructing the view, let alone attachments hanging down? So current guess is 110mm up. That means we can have the cameras on the back of the robot unobstructed.

- What if that’s wrong? They are mounted on 8mm square section carbon fibre tube, so if we need them higher up, we just use longer tubes.

- What kind of LEDs? First we chose RGB LEDs. This means that if we change our minds about colours we can just solder in some new resistors and get any colour we like. We started out with clear 5mm LEDs with 3D printed HD Glass diffusers, but why make work for yourself when you can get 10mm diffused LEDs?

- How many LEDs? Given just two colours and four LEDs you get 2^4 = 16 combinations. Each arena wall has a maximum of seven beacons (if you include the corners), so every beacon on a wall can have a unique pattern. If we need each beacon to be unique in the whole arena we will have to go to three colours (3^4 = 81 combinations) or five LEDs (2^5 = 32 combinations).
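The combination counts above are easy to check by enumeration. This is just a sketch; the function name `beacon_codes` and the single-letter colour codes are our own shorthand for illustration.

```python
from itertools import product

def beacon_codes(colours="RB", leds=4):
    """Return every possible top-to-bottom colour code for one beacon."""
    return ["".join(code) for code in product(colours, repeat=leds)]

two_colour = beacon_codes("RB", 4)       # red/blue, four LEDs
three_colour = beacon_codes("RGB", 4)    # adding a third colour
five_led = beacon_codes("RB", 5)         # adding a fifth LED

print(len(two_colour), len(three_colour), len(five_led))
```

The three lengths are 2^4, 3^4 and 2^5 respectively.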

The beacons are powered at 9V, so they could run from PP3 batteries, hence the little box at the base.

Beacon identification software

First thought, use OpenCV for both image capture and processing. It’s a bit worrying that it takes 25 seconds to load (not to mention 5 hours to install), but runtime is lightning fast and the loading takes place before the timed run, so should not be a real problem. So we start with a pair of 640x480x3 RGB images (possibly on different computers) captured with OpenCV.

We can get 29 frames per second (FPS) capturing stereo pairs on a single computer (the Stereo Pi). It also turns out that we can process them in a very basic way with just NumPy and get a calculation ‘frame rate’ of 1880 FPS, so simple image processing has no real effect on performance. The killer is reliability. OpenCV just doesn’t control the camera hardware properly, which means that every now and then the image goes green monochrome or GStreamer is invoked incorrectly. Even after weeks of trying I cannot resolve this, so it’s PiCamera and NumPy for now.

Phase 1

The base image is 640 columns wide and 480 rows high, with 3 colour channels (RGB).

Locating beacons

This is done by just looking for at least 5 consecutive bright columns in the image to make a column set.
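A minimal NumPy sketch of that column search follows. The brightness threshold of 200 and the rule that a column counts as bright if any pixel in it exceeds the threshold are assumptions for illustration, not measured settings; the function names are ours.

```python
import numpy as np

def find_runs(mask, min_len):
    """Return (start, stop) pairs for runs of True at least min_len long.
    stop is exclusive, so a run covers indices start .. stop-1."""
    padded = np.concatenate(([False], np.asarray(mask, dtype=bool), [False]))
    edges = np.flatnonzero(padded[1:] != padded[:-1])   # alternating run starts and stops
    return [(int(s), int(e)) for s, e in zip(edges[0::2], edges[1::2]) if e - s >= min_len]

def find_column_sets(image, threshold=200, min_width=5):
    """image is a (rows, cols, 3) uint8 array from an underexposed frame."""
    brightest_channel = image.max(axis=2)                # (rows, cols)
    bright_cols = (brightest_channel > threshold).any(axis=0)
    return find_runs(bright_cols, min_width)
```

Each returned pair is one column set; anything narrower than five columns is thrown away as noise.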

Measuring the Angle

The dreadful barrel distortion of the lens is compensated for by a cosine formula determined experimentally from calibration images. This is then used to create a lookup table to convert the column number of the middle column to an angle, i.e. the bearing from the camera.
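The lookup-table idea can be sketched as below. Note that `column_to_angle` here is only a placeholder with made-up constants (`HALF_FOV_DEG`, `K`); it is not the calibrated cosine formula, which would be substituted in its place.

```python
import numpy as np

WIDTH = 640            # image columns, as stated in the text
HALF_FOV_DEG = 31.0    # placeholder half field of view, NOT a measured value
K = 0.1                # placeholder distortion strength, NOT a measured value

def column_to_angle(col):
    """Placeholder correction, standing in for the experimentally fitted formula."""
    half = (WIDTH - 1) / 2
    u = (col - half) / half                      # -1 at the left edge, +1 at the right edge
    return HALF_FOV_DEG * u * (1 + K * (1 - np.cos(u * np.pi / 2))) / (1 + K)

# Built once at start-up, so the per-frame cost is a single array index.
ANGLE_TABLE = column_to_angle(np.arange(WIDTH))

def bearing_of(column_set):
    """Bearing in degrees of the middle column of a (start, stop) column set."""
    start, stop = column_set
    middle = (start + stop - 1) // 2
    return float(ANGLE_TABLE[middle])
```

Because the table is indexed by integer column number, swapping in a better calibration only means regenerating the table.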

Locating LEDs

Look for at least 3 consecutive bright rows in a column set. Note that the LEDs are separated by quite thick separators so that they don’t run into one another in the image. This produces a set of rectangles in the image, one per LED.
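The row search works the same way as the column search, restricted to one column set. In this sketch the threshold of 200, the `(top, bottom, left, right)` rectangle layout and the function names are assumptions for illustration.

```python
import numpy as np

def find_runs(mask, min_len):
    """Return (start, stop) pairs (stop exclusive) for runs of True at least min_len long."""
    padded = np.concatenate(([False], np.asarray(mask, dtype=bool), [False]))
    edges = np.flatnonzero(padded[1:] != padded[:-1])
    return [(int(s), int(e)) for s, e in zip(edges[0::2], edges[1::2]) if e - s >= min_len]

def find_leds(image, column_set, threshold=200, min_height=3):
    """Return one (top, bottom, left, right) rectangle per LED in a column set."""
    left, right = column_set
    strip = image[:, left:right]                              # only this beacon's columns
    bright_rows = (strip.max(axis=2) > threshold).any(axis=1)
    return [(top, bottom, left, right) for top, bottom in find_runs(bright_rows, min_height)]
```

The dark separators between LEDs show up as gaps in `bright_rows`, which is what splits one beacon into separate rectangles.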

Determining the colours

Because we only have red and blue, we just sum those colours in an LED rectangle; if there’s more red than blue, it’s a red LED, otherwise it’s blue. Note that using OpenCV and YUV encoding we may be able to reliably distinguish green as well, but can’t do that currently.
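The red-versus-blue test is a one-liner per rectangle. This sketch assumes RGB channel order (index 0 is red, index 2 is blue) and rectangles given as `(top, bottom, left, right)`; both are illustrative conventions.

```python
import numpy as np

def led_colour(image, rect):
    """Return 'R' if the rectangle holds more red than blue, otherwise 'B'."""
    top, bottom, left, right = rect
    patch = image[top:bottom, left:right].astype(np.int64)   # avoid uint8 overflow when summing
    red, blue = patch[..., 0].sum(), patch[..., 2].sum()
    return "R" if red > blue else "B"

def beacon_code(image, rects):
    """Join the LED colours from the top of the image downwards, e.g. 'RBBR'."""
    return "".join(led_colour(image, rect) for rect in sorted(rects))
```

Sorting the rectangles orders them by their top row, so the code always reads from the top LED down.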

Identifying the beacons

We have a database of beacons and their colour codes, so RBBR is a beacon with, from the top, Red, Blue, Blue and Red LEDs. The database records each beacon’s location in arena coordinates (the garage is 0,0).
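In its simplest form the beacon database is a mapping from colour code to arena position. The entries below are made-up coordinates in millimetres, purely for illustration; the real table would hold the surveyed beacon positions.

```python
# Hypothetical entries: colour code (top to bottom) -> (x, y) in arena coordinates, mm.
BEACONS = {
    "RBBR": (0.0, 1500.0),
    "BRRB": (750.0, 1500.0),
    "RRBB": (1500.0, 1500.0),
}

def identify(code):
    """Return the arena (x, y) for a colour code, or None if the code is unknown."""
    return BEACONS.get(code)
```

Returning `None` for an unknown code lets a misread beacon be dropped rather than producing a wrong fix.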

The end result of Phase 1 is a set of beacon identifiers and angles. These are written to a database (currently on the Pi Zero, but eventually it will be on the central Pi).

Phase 2 - Getting a Fix

Choosing the bearings

From the bearings table we choose which ones to use. We want bearings of the same beacons from both cameras taken at the same time. From those we want the pair of beacons furthest apart to get the best angles, so from the beacons which occur in both images we choose the leftmost beacon and the rightmost beacon.
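The selection rule can be sketched as follows, assuming each camera's results for one instant arrive as a dictionary of beacon code to bearing in degrees, with bearings increasing from left to right. The function name and data layout are illustrative.

```python
def choose_pair(left_cam, right_cam):
    """Return (leftmost, rightmost) beacon codes seen by both cameras, or None.

    left_cam and right_cam map beacon code -> bearing in degrees for the same instant.
    """
    common = left_cam.keys() & right_cam.keys()
    if len(common) < 2:
        return None                                    # not enough shared beacons for a fix
    ordered = sorted(common, key=lambda code: left_cam[code])
    return ordered[0], ordered[-1]

left = {"RBBR": -20.0, "BRRB": 3.0, "RRBB": 18.0, "BBBB": 25.0}
right = {"RBBR": -24.0, "BRRB": -1.0, "RRBB": 14.0}
pair = choose_pair(left, right)
```

Here `BBBB` is ignored because only the left camera saw it.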

Calculating the position

This is some trigonometry, using the cosine rule and the sine rule. The result is the location of the beacons relative to the robot. Translating the co-ordinate systems we calculate the location of the robot relative to the arena.
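One way to do this calculation is sketched below. It uses the sine rule to range each beacon from the camera baseline, then a rotation and translation to get the robot's pose, so it may differ in detail from our actual working. Assumptions: the robot frame has x to the right and y forward, the cameras sit at (-baseline/2, 0) and (+baseline/2, 0) facing forward, and bearings are in radians, positive to the right.

```python
import math

def triangulate(theta_left, theta_right, baseline):
    """Beacon position (x, y) in the robot frame from the two camera bearings."""
    parallax = theta_left - theta_right               # angle at the beacon in the triangle
    if abs(math.sin(parallax)) < 1e-9:
        raise ValueError("bearings are parallel, so the range cannot be found")
    # Sine rule: range_left / sin(90deg + theta_right) = baseline / sin(parallax)
    range_left = baseline * math.cos(theta_right) / math.sin(parallax)
    return (-baseline / 2 + range_left * math.sin(theta_left),
            range_left * math.cos(theta_left))

def robot_pose(p1, p2, a1, a2):
    """Return (x, y, phi): robot origin in arena coordinates and the rotation
    (radians) from the robot frame to the arena frame.

    p1, p2 are two beacons in the robot frame; a1, a2 are the same beacons
    in arena coordinates, taken from the beacon database.
    """
    phi = (math.atan2(a2[1] - a1[1], a2[0] - a1[0])
           - math.atan2(p2[1] - p1[1], p2[0] - p1[0]))
    c, s = math.cos(phi), math.sin(phi)
    x = a1[0] - (c * p1[0] - s * p1[1])               # arena = rotate(robot) + (x, y)
    y = a1[1] - (s * p1[0] + c * p1[1])
    return x, y, phi
```

A useful sanity check is to compare the distance between `p1` and `p2` with the known distance between `a1` and `a2`; a large mismatch suggests a bad bearing.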

Next ...

PID control of motors using the location delivered above (PID = Proportional Integral Derivative). Planned path will be a series of locations (arena x,y co-ordinates), plus angle (orientation of the robot relative to the arena).
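For reference, a textbook PID controller looks like the sketch below. The gains are placeholders that would need tuning on the robot, and the error fed in would be the difference between the planned and measured position or angle.

```python
class PID:
    """Minimal textbook PID controller; the gains are placeholders to be tuned."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.previous_error = None

    def update(self, error, dt):
        """Return the control output for the current error; dt is seconds since the last call."""
        self.integral += error * dt
        if self.previous_error is None:
            derivative = 0.0                           # no history on the first call
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

heading_pid = PID(kp=2.0, ki=0.5, kd=0.1)
first = heading_pid.update(error=10.0, dt=0.1)
second = heading_pid.update(error=8.0, dt=0.1)
```

One controller per controlled quantity (for example one for heading and one for distance along the path) is a common arrangement.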

Performance

Cameras

The basic Pi camera is a very cheap device using a tiny plastic lens. It has bad barrel distortion and you might think that we have to do a complex correction grid, but actually, because of the very specific use case, a fairly straightforward correction does the job. So long as the camera sensor is absolutely vertical and at exactly the same height as the middle of the LED beacon, the barrel distortion above and below the middle doesn’t affect the measured bearing.

Timing

Obviously the calculation of location from a stereo pair of images taken from a moving vehicle is dependent on the two images being taken at the same time. Paula has done a study of synchronisation procedures which should solve the problem of clock differences. Because picamera capture cannot be directly triggered (you are picking up frames from a continuous video stream) some more work is required to convert clock synchronicity into camera synchronicity.
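One possible way to turn synchronised clocks into synchronised frames is to timestamp every frame and pair each left frame with the nearest right frame, rejecting pairs that are too far apart. This is only a sketch of that idea, not something we have working; the function name and the tolerance are assumptions.

```python
def pair_frames(left_times, right_times, tolerance):
    """Pair each left frame with the nearest right frame in time.

    Both inputs are sorted lists of timestamps in seconds on a common clock.
    Returns (left_index, right_index) pairs whose timestamps differ by at most tolerance.
    """
    pairs, j = [], 0
    for i, t in enumerate(left_times):
        # Advance j while the next right frame is at least as close to t.
        while j + 1 < len(right_times) and abs(right_times[j + 1] - t) <= abs(right_times[j] - t):
            j += 1
        if right_times and abs(right_times[j] - t) <= tolerance:
            pairs.append((i, j))
    return pairs

pairs = pair_frames([0.000, 0.034, 0.068], [0.005, 0.040, 0.120], tolerance=0.010)
```

In this example the third left frame has no right frame within 10 ms, so it is left unpaired.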

Basic Capture Frame Rate

Stereo Pi (= Pi 3), single camera, RGB, picamera:

- straight capture: 1.7 fps

- capture using video port: 5.0 fps

Stereo Pi (= Pi 3), camera pair, BGR, OpenCV:

- capture using video port: 29.3 fps

Single Pi Zero 2 W, single camera, BGR, OpenCV:

- capture using video port: 61.7 fps

Geometry Frame Rate

Computing the location from column numbers, using NumPy: 1880 fps

Accuracy

This is the big one. To avoid the need for supplementary location systems we need to get pretty close to 1mm accuracy. 10mm might be OK, but 100mm would be a waste of time. At present we are not near that, but there is time for more optimisation and calibration.